Training Data Synthesis (Part 2)

[This article is also published under the same title on the WeChat official account, on Zhihu, and on the personal blog linsight.cn]

Let's continue with some more important data synthesis work.

self-instruct

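Self-instruct is a classic piece of work on data synthesis for LLMs. Its main goal is to obtain large amounts of instruction data for LLM fine-tuning at low cost. The overall self-instruct pipeline is as follows: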

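The paper first defines what "instruction data" is. An "instruction" is a task described in natural language, denoted I. A single task can have multiple input-output instances. For example: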

Instruction I = "Write an essay about the following topic"

Input X = "school safety"

Output Y is an essay on that topic.

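In many cases, however, there is no clear boundary between an "instruction" and an "instance", because an instruction can itself be an instance. For example, "Write an essay about school safety" is both an instruction and an instance. When it is treated as an instruction, its input X is simply empty.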

Back to the self-instruct data synthesis pipeline, which has four steps.

1. Instruction Generation

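The task pool is first initialized with 175 human-written instructions. At each generation step, 8 instructions are randomly sampled from the pool as in-context examples. These 8 examples mix human-written data with synthetic data from earlier rounds. The prompt is: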

Come up with a series of tasks:

Task 1: {instruction for existing task 1}

Task 2: {instruction for existing task 2}

Task 3: {instruction for existing task 3}

Task 4: {instruction for existing task 4}

Task 5: {instruction for existing task 5}

Task 6: {instruction for existing task 6}

Task 7: {instruction for existing task 7}

Task 8: {instruction for existing task 8}

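Task 9:

This open-ended prompt lets the model generate several tasks in one pass. In the experiments, generation stops either when the maximum length is reached or when the model reaches "Task 16".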
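Below is a minimal Python sketch of this step. It assumes the pool is kept as two lists of instruction strings, one human-written and one synthetic. The split of the 8 in-context examples into 6 human-written and 2 synthetic is an assumption; the text above only says that the examples are mixed. The function names are hypothetical, and the LLM call itself is omitted.

```python
import random
import re

def build_generation_prompt(human_pool, synthetic_pool, n_human=6, n_synthetic=2, rng=random):
    """Sample in-context instructions and format the 'Come up with a series of tasks' prompt.

    The n_human / n_synthetic split is an assumed default, not taken from the text above.
    """
    # Early on there may be too few synthetic tasks, so fall back to human-written ones
    n_syn = min(n_synthetic, len(synthetic_pool))
    examples = rng.sample(human_pool, n_human + n_synthetic - n_syn) + rng.sample(synthetic_pool, n_syn)
    rng.shuffle(examples)
    lines = ["Come up with a series of tasks:"]
    for i, inst in enumerate(examples, start=1):
        lines.append(f"Task {i}: {inst}")
    # Leave the next slot open so the model continues the list
    lines.append(f"Task {len(examples) + 1}:")
    return "\n".join(lines)

def parse_generated_tasks(completion, first_index=9, stop_index=16):
    """Split the model's continuation into new instructions, discarding 'Task {stop_index}' and beyond."""
    # The prompt ends with 'Task 9:', so the completion starts with the body of task 9
    text = f"Task {first_index}: " + completion
    # With a capturing group, re.split yields ['', '9', body, '10', body, ...]
    parts = re.split(r"Task (\d+):", text)
    tasks = []
    for num, body in zip(parts[1::2], parts[2::2]):
        if int(num) >= stop_index:
            break
        body = body.strip()
        if body:
            tasks.append(body)
    return tasks
```

In practice "Task 16" would more likely be passed to the model API as a stop sequence. The parser only makes sure nothing past it is kept.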

2. Classification Task Identification

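Tasks are divided into two types, classification and non-classification, so the type of each task must be identified first. That said, this distinction seems less necessary today. Classification tasks are becoming rarer in LLM applications, while complex tasks are becoming more common.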

A few-shot prompt is used to have the LLM make this judgment:

Can the following task be regarded as a classification task with finite output labels?

Task: Given my personality and the job, tell me if I would be suitable.

Is it classification? Yes

Task: Give me an example of a time when you had to use your sense of humor.

Is it classification? No

Task: Replace the placeholders in the given text with appropriate named entities.

Is it classification? No

Task: Fact checking - tell me if the statement is true, false, or unknown, based on your knowledge and common sense.

Is it classification? Yes

Task: Return the SSN number for the person.

Is it classification? No

...

Task: {instruction for the target task}

3. Instance Generation

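Given the instruction and its task type (classification or non-classification), the model is asked to generate concrete instances, each with an input and an output. A few practical lessons: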

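- Few-shot examples work better when they do not come from the same kind of task as the instruction being expanded, because this increases the diversity of the generated instances.

- Normally the model generates the input first and then the output. With this fixed order, however, the model tends to produce instances of only one label for classification tasks. The order can therefore be reversed for classification tasks: the model generates the output (the label) first and then the input, which breaks this bias.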
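The two lessons above can be sketched as follows. `pick_demos`, `is_classification` and `instance_prompt` are hypothetical helpers. The wording inside `instance_prompt` is illustrative and is not the paper's actual prompt. The sketch only shows how the step-2 classification decision switches generation between output-first and input-first order.

```python
import random

def pick_demos(pool, target_task, k, rng=random):
    """Pick up to k few-shot instances whose task differs from the target task (for diversity)."""
    candidates = [demo for demo in pool if demo["task"] != target_task]
    return rng.sample(candidates, min(k, len(candidates)))

def is_classification(judgement):
    """Parse the LLM's answer to 'Is it classification?' (Yes / No)."""
    return judgement.strip().lower().startswith("yes")

def instance_prompt(instruction, classification):
    """Build an illustrative instance-generation request for one instruction."""
    if classification:
        # Output-first: fix the label before writing the input, to avoid label bias
        order = "First write a class label, then write an input that matches this label."
    else:
        # Input-first: write the input (possibly empty), then the output
        order = "First write an input for the task (it may be empty), then write the output."
    return f"Task: {instruction}\n{order}\n"
```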

4. Filtering and Postprocessing

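Generated data will inevitably resemble data already in the pool. To increase diversity, a new instruction is kept and added to the task pool only if its ROUGE-L similarity to every existing instruction is below 0.7. Other similarity measures can also be used.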

The model also sometimes generates tasks that an LLM cannot complete, such as multimedia-related tasks. These instructions are filtered out with keywords.
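Here is a self-contained sketch of both filters. ROUGE-L is implemented as the F1 of the longest common subsequence over lowercase alphanumeric tokens. This is a simplification; a library such as `rouge_score` could be used instead. The 0.7 threshold comes from the text. `MEDIA_KEYWORDS` is an illustrative list and not necessarily the paper's exact one.

```python
import re

def tokenize(text):
    # Simplified tokenizer: lowercase alphanumeric runs
    return re.findall(r"[a-z0-9]+", text.lower())

def lcs_length(a, b):
    """Longest common subsequence length via a rolling dynamic-programming row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]

def rouge_l_f1(candidate, reference):
    c, r = tokenize(candidate), tokenize(reference)
    lcs = lcs_length(c, r) if c and r else 0
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)

# Illustrative keyword list (an assumption, not the paper's exact list)
MEDIA_KEYWORDS = {"image", "images", "picture", "pictures", "video", "audio",
                  "graph", "graphs", "draw", "plot", "diagram"}

def filter_new_instructions(candidates, pool, threshold=0.7):
    kept = []
    for inst in candidates:
        # Drop tasks an LLM cannot do (multimedia)
        if set(tokenize(inst)) & MEDIA_KEYWORDS:
            continue
        # Keep only if dissimilar to the pool and to instructions accepted in this batch
        if all(rouge_l_f1(inst, old) < threshold for old in pool + kept):
            kept.append(inst)
    return kept
```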

evol-instruct

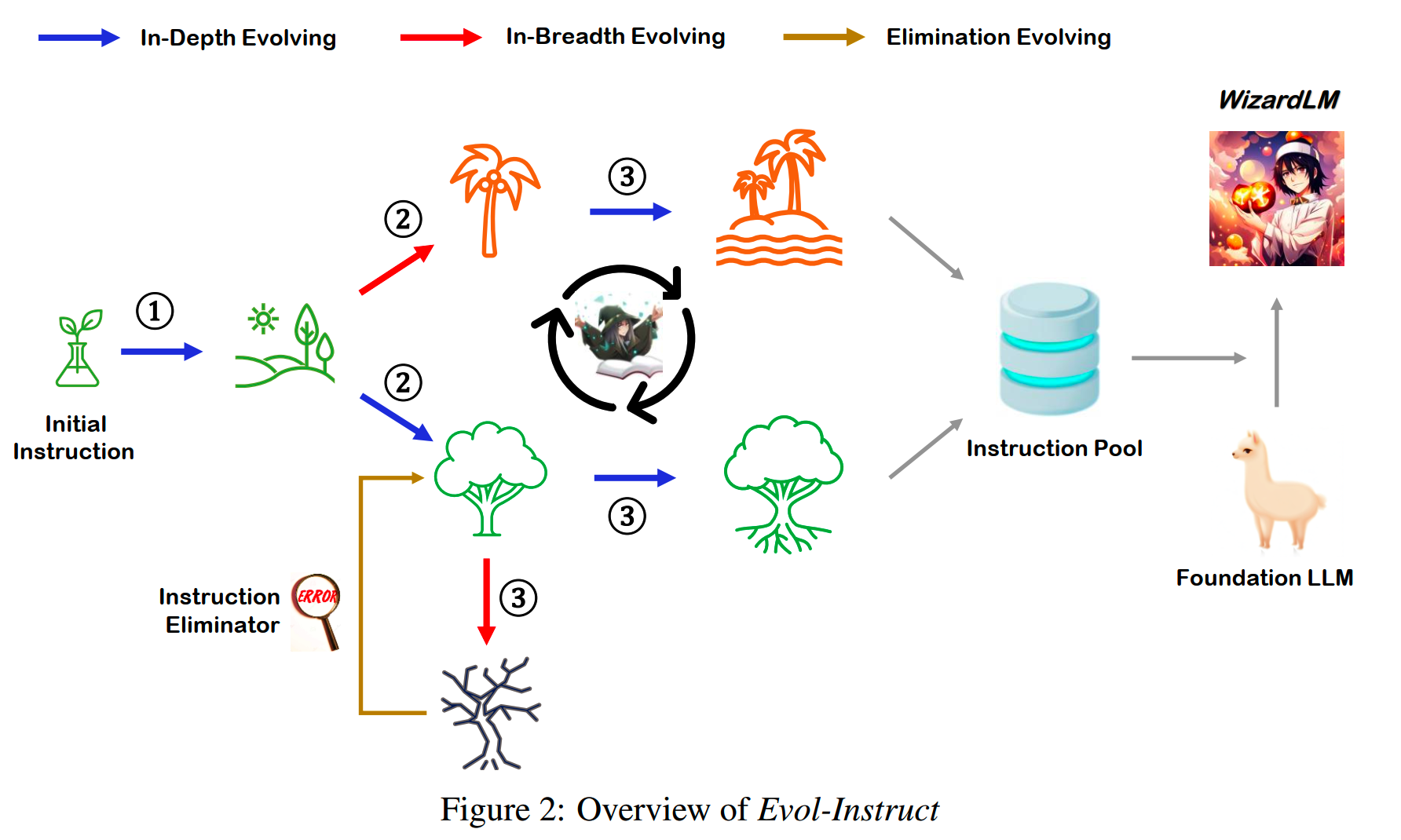

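Evol-instruct is a method for increasing the diversity and difficulty of existing instruction data. Its focus is data quality rather than data quantity. The overall evol-instruct pipeline is as follows: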

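Starting from the initial dataset D_0, each round of instruction evolution evolves every instruction in D_t (the data at round t) to produce a new dataset D_{t+1}. After M rounds of evolution, this yields the datasets {D_0, D_1, D_2, ..., D_M}.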

Evol-instruct has three main steps: (1) instruction evolving, (2) response generation, and (3) elimination evolving.

1. Instruction Evolution

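In each round, an LLM either makes an existing instruction harder or increases diversity. If evolution succeeds, the new instruction is added to the new data pool. If it fails, the original instruction is put back and processed again in the next round.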
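The round structure can be sketched as below: D_0 through D_M, with failed instructions carried over to the next round. `evolve`, `respond` and `is_failed` are hypothetical callables standing in for the LLM calls and the elimination checks. Choosing uniformly among the 5 in-depth directions and in-breadth evolving is an assumption made for illustration. Only instructions are tracked here; in practice each evolved instruction would be stored together with its response.

```python
import random

# Hypothetical operation names: 5 in-depth directions plus in-breadth evolving
DEPTH_OPS = ["add_constraints", "deepening", "concretizing",
             "increased_reasoning_steps", "complicating_input"]
BREADTH_OPS = ["in_breadth"]

def evolve_dataset(d0, evolve, respond, is_failed, rounds, rng=random):
    """Run `rounds` evolution rounds and return [D_0, D_1, ..., D_M].

    evolve(instruction, op)       -> evolved instruction (an LLM call in practice)
    respond(instruction)          -> response            (an LLM call in practice)
    is_failed(old, new, response) -> bool                (elimination evolving)
    """
    datasets = [list(d0)]
    to_process = list(d0)
    for _ in range(rounds):
        evolved, carry_over = [], []
        for inst in to_process:
            op = rng.choice(DEPTH_OPS + BREADTH_OPS)  # uniform choice: an assumption
            new = evolve(inst, op)
            if is_failed(inst, new, respond(new)):
                carry_over.append(inst)  # failed: put the original back for the next round
            else:
                evolved.append(new)      # succeeded: goes into D_{t+1}
        datasets.append(evolved)
        to_process = evolved + carry_over
    return datasets
```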

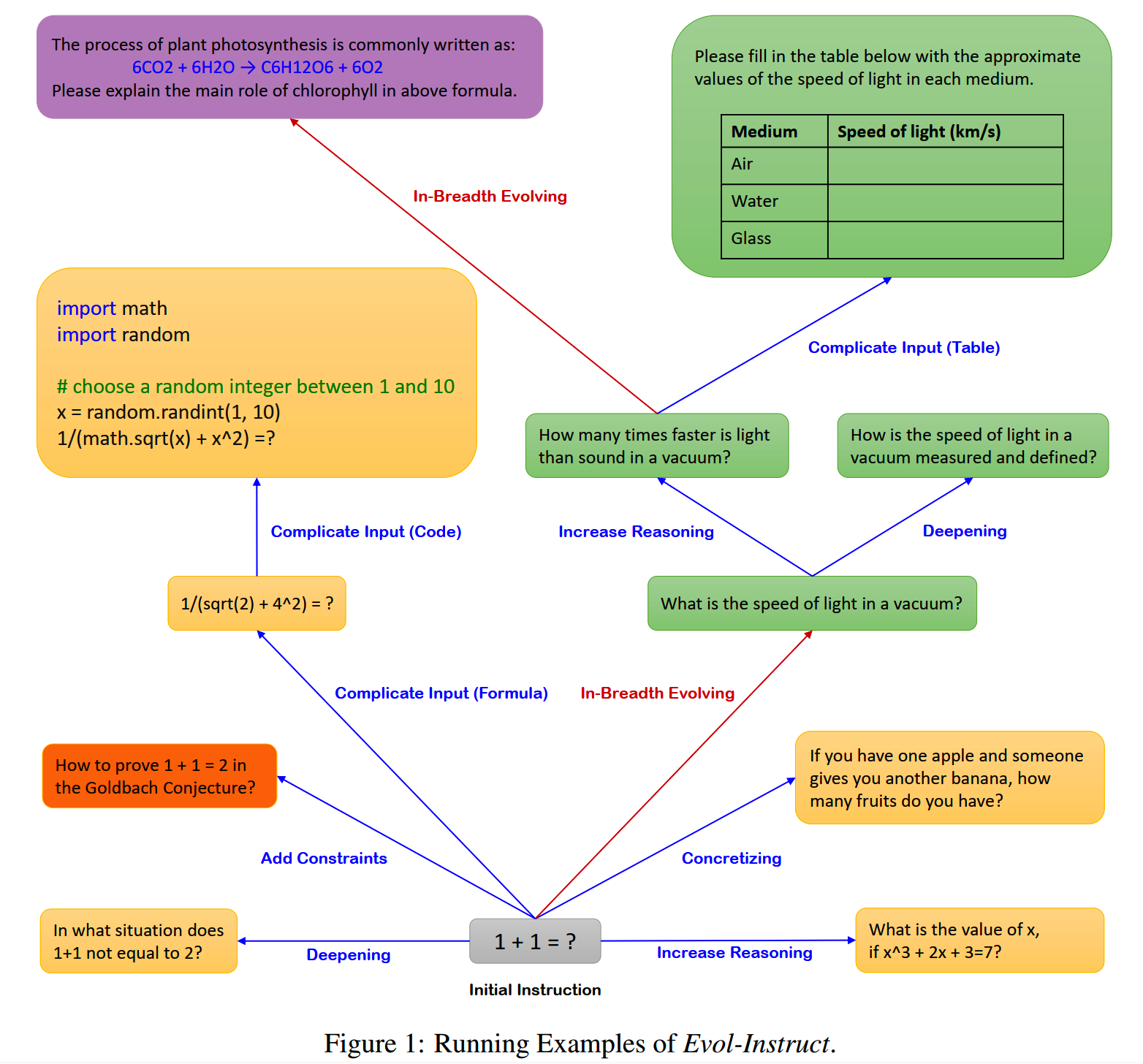

Instruction evolution comes in two types: in-depth evolving and in-breadth evolving.

(1) In-depth evolving

In-depth evolving aims to make instructions harder. There are 5 types of prompts, each representing a specific direction: add constraints, deepening, concretizing, increased reasoning steps, and complicating input.

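Each in-depth evolution step should make the instruction only "a little bit harder" and may add at most 10 to 20 words. This keeps the instruction from suddenly becoming so difficult that most humans could not understand it.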
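Prompts a to d of the in-depth prompts listed below share the same frame; only the line after "using the following method:" changes. A small builder makes this explicit. The text constants are copied from those prompts, with straight quotes. Complicating input is left out because it uses a few-shot prompt. `within_word_budget` is a hypothetical post-check for the 10 to 20 word limit; in the method itself the limit is only stated inside the prompt.

```python
DEPTH_HEADER = (
    "I want you act as a Prompt Rewriter.\n"
    "Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.\n"
    "But the rewritten prompt must be reasonable and must be understood and responded by humans.\n"
    "Your rewriting cannot omit the non-text parts such as the table and code in #Given Prompt#:. Also, please do not omit the input in #Given Prompt#.\n"
    "You SHOULD complicate the given prompt using the following method:\n"
)
DEPTH_FOOTER = (
    "You should try your best not to make the #Rewritten Prompt# become verbose, #Rewritten Prompt# can only add 10 to 20 words into #Given Prompt#.\n"
    "'#Given Prompt#', '#Rewritten Prompt#', 'given prompt' and 'rewritten prompt' are not allowed to appear in #Rewritten Prompt#\n"
)
# The only part that differs between prompts a-d
DEPTH_METHODS = {
    "add_constraints": "Please add one more constraints/requirements into #Given Prompt#",
    "deepening": "If #Given Prompt# contains inquiries about certain issues, the depth and breadth of the inquiry can be increased.",
    "concretizing": "Please replace general concepts with more specific concepts.",
    "increased_reasoning_steps": "If #Given Prompt# can be solved with just a few simple thinking processes, you can rewrite it to explicitly request multiple-step reasoning.",
}

def build_depth_prompt(instruction, method):
    return (DEPTH_HEADER + DEPTH_METHODS[method] + "\n" + DEPTH_FOOTER
            + "#Given Prompt#:\n" + instruction + "\n#Rewritten Prompt#:\n")

def within_word_budget(old, new, max_added=20):
    """Hypothetical check: did the rewrite add at most max_added words?"""
    return len(new.split()) - len(old.split()) <= max_added
```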

The prompts are as follows:

a. add constraints

I want you act as a Prompt Rewriter.

Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.

But the rewritten prompt must be reasonable and must be understood and responded by humans.

Your rewriting cannot omit the non-text parts such as the table and code in #Given Prompt#:. Also, please do not omit the input in #Given Prompt#.

You SHOULD complicate the given prompt using the following method:

Please add one more constraints/requirements into #Given Prompt#

You should try your best not to make the #Rewritten Prompt# become verbose, #Rewritten Prompt# can only add 10 to 20 words into #Given Prompt#.

‘#Given Prompt#’, ‘#Rewritten Prompt#’, ‘given prompt’ and ‘rewritten prompt’ are not allowed to appear in #Rewritten Prompt#

#Given Prompt#:

<Here is instruction.>

#Rewritten Prompt#:

b. deepening

I want you act as a Prompt Rewriter.

Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.

But the rewritten prompt must be reasonable and must be understood and responded by humans.

Your rewriting cannot omit the non-text parts such as the table and code in #Given Prompt#:. Also, please do not omit the input in #Given Prompt#.

You SHOULD complicate the given prompt using the following method:

If #Given Prompt# contains inquiries about certain issues, the depth and breadth of the inquiry can be increased.

You should try your best not to make the #Rewritten Prompt# become verbose, #Rewritten Prompt# can only add 10 to 20 words into #Given Prompt#.

‘#Given Prompt#’, ‘#Rewritten Prompt#’, ‘given prompt’ and ‘rewritten prompt’ are not allowed to appear in #Rewritten Prompt#

#Given Prompt#:

<Here is instruction.>

#Rewritten Prompt#:

c. concretizing

I want you act as a Prompt Rewriter.

Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.

But the rewritten prompt must be reasonable and must be understood and responded by humans.

Your rewriting cannot omit the non-text parts such as the table and code in #Given Prompt#:. Also, please do not omit the input in #Given Prompt#.

You SHOULD complicate the given prompt using the following method:

Please replace general concepts with more specific concepts.

You should try your best not to make the #Rewritten Prompt# become verbose, #Rewritten Prompt# can only add 10 to 20 words into #Given Prompt#.

‘#Given Prompt#’, ‘#Rewritten Prompt#’, ‘given prompt’ and ‘rewritten prompt’ are not allowed to appear in #Rewritten Prompt#

#Given Prompt#:

<Here is instruction.>

#Rewritten Prompt#:

d. increased reasoning steps

I want you act as a Prompt Rewriter.

Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.

But the rewritten prompt must be reasonable and must be understood and responded by humans.

Your rewriting cannot omit the non-text parts such as the table and code in #Given Prompt#:. Also, please do not omit the input in #Given Prompt#.

You SHOULD complicate the given prompt using the following method:

If #Given Prompt# can be solved with just a few simple thinking processes, you can rewrite it to explicitly request multiple-step reasoning.

You should try your best not to make the #Rewritten Prompt# become verbose, #Rewritten Prompt# can only add 10 to 20 words into #Given Prompt#.

‘#Given Prompt#’, ‘#Rewritten Prompt#’, ‘given prompt’ and ‘rewritten prompt’ are not allowed to appear in #Rewritten Prompt#

#Given Prompt#:

<Here is instruction.>

#Rewritten Prompt#:

e. complicating input

Unlike the previous prompts, complicating input requires a few-shot prompt.

I want you act as a Prompt Rewriter.

Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.

But the rewritten prompt must be reasonable and must be understood and responded by humans.

You must add [XML data] format data as input data in [Rewritten Prompt]

#Given Prompt#:

<Here is Demonstration instruction 1.>

#Rewritten Prompt#:

<Here is Demonstration Example 1.>

... N -1 Examples ...

I want you act as a Prompt Rewriter.

Your objective is to rewrite a given prompt into a more complex version to make those famous AI systems (e.g., ChatGPT and GPT4) a bit harder to handle.

But the rewritten prompt must be reasonable and must be understood and responded by humans.

You must add [#Given Dataformat#] format data as input data, add [#Given Dataformat#] code as input code in [Rewritten Prompt]

Rewrite prompt must be a question style instruction

#Given Prompt#:

<Here is instruction.>

#Rewrite prompt must be a question style instruction Rewritten Prompt(MUST contain a specific JSON data as input#:

(2) In-breadth evolving

In-breadth evolving aims to broaden topic coverage and increase dataset diversity. The prompt is:

I want you act as a Prompt Creator.

Your goal is to draw inspiration from the #Given Prompt# to create a brand new prompt.

This new prompt should belong to the same domain as the #Given Prompt# but be even more rare.

The LENGTH and difficulty level of the #Created Prompt# should be similar to that of the #Given Prompt#.

The #Created Prompt# must be reasonable and must be understood and responded by humans.

‘#Given Prompt#’, ‘#Created Prompt#’, ‘given prompt’ and ‘created prompt’ are not allowed to appear in #Created Prompt#.

#Given Prompt#:

<Here is instruction.>

#Created Prompt#:

Below is an example of evolution in various directions:

2. Response Generation

Response generation is fairly routine: the evolved prompt is simply fed to the LLM.

3. Elimination Evolving

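Having evolved prompts and responses is not the end, because evolution sometimes fails. In a failed case, the evolved result is discarded and the original data is used in the next round of evolution.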

What counts as a failed evolution? There are four main cases.

(1) The evolved instruction provides no information gain over the original. This can be judged by prompting ChatGPT:

Here are two Instructions to ChatGPT AI, do you think they are equal to each other, which meet the following requirements:

1. They have same constraints and requirments.

2. They have same depth and breadth of the inquiry.

The First Prompt: <Here is first instruction.>

The Second Prompt: <Here is second instruction.>

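Your Judgement (Just answer: Equal or Not Equal. No need to explain the reason.):

(2) The evolved instruction makes it hard for the LLM to produce a response, for example when the generated response contains "sorry" and is relatively short.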

(3) The generated response is abnormal, for example it contains only punctuation or is empty.

(4) The evolved instruction is of poor quality because it copies content from the evolving prompt, such as "#Rewritten Prompt#".
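The four failure cases can be written as a single predicate. Here `equal_judgement` is assumed to be the raw ChatGPT answer to the "Equal or Not Equal" prompt above. The 80-word cutoff for "relatively short" is an assumed default. The marker list is illustrative, built from the strings the evolving prompts forbid.

```python
import string

# Strings from the evolving prompts that must not leak into an evolved instruction
COPIED_MARKERS = ("#Given Prompt#", "#Rewritten Prompt#", "#Created Prompt#",
                  "given prompt", "rewritten prompt", "created prompt")

def evolution_failed(new_instruction, response, equal_judgement, max_sorry_words=80):
    # (1) No information gain: the judge said the two prompts are "Equal"
    if equal_judgement.strip().lower().startswith("equal"):
        return True
    # (2) The LLM struggles: a short response containing "sorry" (cutoff is an assumption)
    if "sorry" in response.lower() and len(response.split()) < max_sorry_words:
        return True
    # (3) Abnormal response: empty or punctuation only
    if not response.strip(string.punctuation + string.whitespace):
        return True
    # (4) The evolved instruction copied wording from the evolving prompt
    lowered = new_instruction.lower()
    return any(marker.lower() in lowered for marker in COPIED_MARKERS)
```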

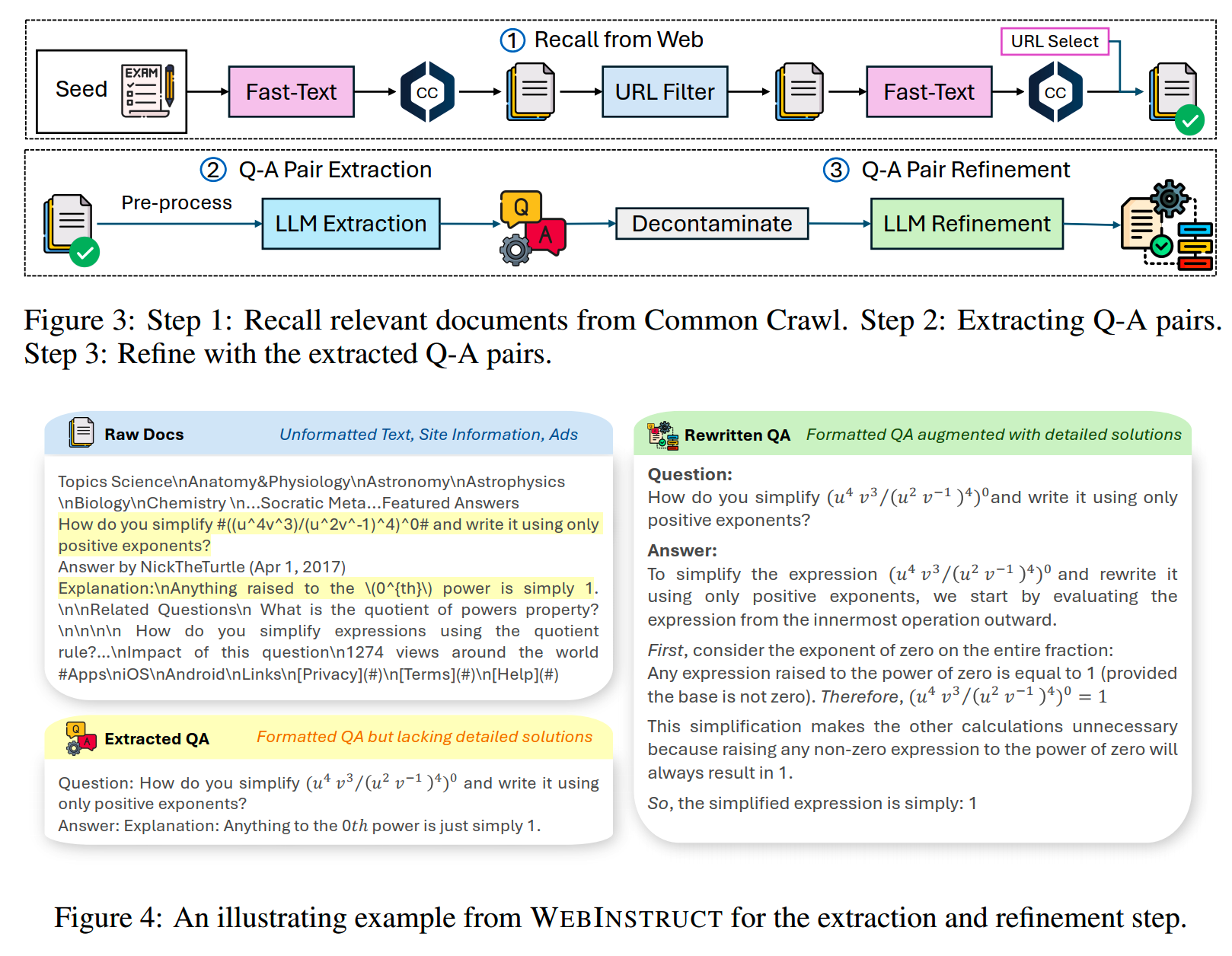

MAmmoTH2

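"MAmmoTH2: Scaling Instructions from the Web" describes how to build instruction data from web data, producing the WebInstruct dataset for fine-tuning LLMs. Rather than data synthesis, though, this approach is closer to data extraction.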

Building WebInstruct involves three main steps:

1. Document Extraction

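A fastText classifier is trained with seed data covering the target domain (e.g. math) as positives and 100k documents randomly sampled from web data as negatives. The classifier is then used to recall target-domain documents from web data. Recalled documents are grouped by URL domain, and any domain that ends up with fewer than 1000 documents is discarded.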

In the end, about 100B of data was recalled from Common Crawl.
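The grouping and domain-size filter can be sketched in plain Python. `is_in_domain` is a stand-in for the trained fastText classifier. With the `fasttext` package, one would typically train it via `fasttext.train_supervised` and score documents with `model.predict`. Grouping by the URL's host name, and keeping domains with at least 1000 documents, is our reading of the rule above.

```python
from collections import defaultdict
from urllib.parse import urlparse

def recall_and_group(docs, is_in_domain, min_docs_per_domain=1000):
    """docs: iterable of (url, text) pairs; is_in_domain: stand-in for the fastText classifier."""
    by_domain = defaultdict(list)
    for url, text in docs:
        if is_in_domain(text):
            by_domain[urlparse(url).netloc].append((url, text))
    # Drop domains that contribute too few recalled documents
    return {domain: items for domain, items in by_domain.items()
            if len(items) >= min_docs_per_domain}
```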

2. Q-A Pair Extraction

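Web data contains a lot of QA content, but it is mixed with other material such as ads and page templates. The documents are therefore first cleaned with simple rules. Qwen-72B is then used to extract relatively clean QA content from them.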

3. Q-A Pair Refinement

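The QA pairs extracted in step 2 are not necessarily of good quality, and in fact many are not, so they are rewritten. For example, if an answer does not contain a complete solution, an LLM is used to complete it.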

GENIE

GENIE = Generate information & elucidate. It mainly synthesizes data for content-grounded generation tasks, such as long-form question answering (LFQA), summarization, and information extraction.

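GENIE's synthesis pipeline is quite simple: (1) prepare content data, (2) generate task-specific content, and (3) check and filter. The interesting part is the details.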

1. Prepare content data

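This step extracts documents from web pages while preserving document structure such as lists, tables, and section information. Documents are stored in markdown format. The experiments use the NQ passages dataset.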

2. Generate task-specific content

Given a document, a few-shot prompt is used to obtain task data such as QA pairs and summaries. The prompt is:

Instruction: Given the next [document], create a [question] and [answer] pair that are grounded in the main point of the document, don't add any additional information that is not in the document. The [question] is by an information-seeking user and the [answer] is provided by a helping AI Agent.

[document]: Scrumptious Sweet Co. factory ..

### Response:

[question]: Where was the movie Chitty Chitty Bang Bang filmed?

[answer]: Chitty Chitty Bang Bang was filmed in a variety of locations in England and Germany. Some ..

[document]: The series stars Eugene Levy as Johnny Rose …

### Response:

[question]: What is the plot of the show Schitt's Creek?

[answer]: The show Schitt's Creek is about a wealthy family who loses their fortune and is forced to rebuild their lives in a small town. The show follows the family as they adjust to their new life in the town and learn to appreciate the simple things in life.

[document]: 2016's countdown broke several Hottest 100 records…

### Response:

[question]: What was the most popular song on the 2016 Hottest 100?

[answer]: The most popular song on the 2016 Hottest 100 was "Never Be Like You" by Flume. This was the first time that an electronic dance music producer topped the countdown.

[document]: In Greek mythology, Persephone ..

### Response:

[question]: Who is Persephone in Greek mythology and what is her significance?

[answer]: In Greek mythology, Persephone, also known as Kore, is the daughter of Zeus and Demeter. She ..

[document]: Terry, the dog that played Toto in the 1939 screen …

### Response:

[question]: What breed of dog was Toto in the Wizard of Oz?

[answer]: Terry, the dog that played Toto in the 1939 screen adaptation of The Wizard of Oz, was a brindle Cairn terrier.

3. Check and filter

(1) Format

Results that are too short or too long are filtered out. A summary, for example, should not be longer than the original document.

(2) Faithfulness

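An NLI model compares the original document with the generated QA pair or summary to determine whether the generated content is logically entailed by the document.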

(3) Quality

A reward model scores the data. The model used is reward-model-deberta-v3-large-v2.
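Below is a sketch of the three checks together with a parser for the `[question]`/`[answer]` format produced by the few-shot prompt above. `entail_prob(premise, hypothesis)` and `reward(question, answer)` are hypothetical callables that would wrap an NLI model and the reward model. Every threshold is an illustrative assumption. Applying the "not longer than the document" rule to answers is also our assumption.

```python
import re

def parse_qa(response):
    """Extract (question, answer) from a completion in the [question]/[answer] format."""
    m = re.search(r"\[question\]:\s*(.*?)\s*\[answer\]:\s*(.*)", response, re.S)
    if m is None:
        return None
    question = m.group(1).strip()
    # Cut off anything after the answer if the model continued with a new "[document]:"
    answer = m.group(2).split("[document]")[0].strip()
    return question, answer

def keep_example(document, question, answer, entail_prob, reward,
                 min_words=3, min_entail=0.5, min_reward=0.0):
    # (1) Format: not too short, and not longer than the source document
    n_words = len(answer.split())
    if n_words < min_words or n_words > len(document.split()):
        return False
    # (2) Faithfulness: NLI entailment probability of the answer given the document
    if entail_prob(document, answer) < min_entail:
        return False
    # (3) Quality: reward-model score of the (question, answer) pair
    return reward(question, answer) >= min_reward
```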

Orca-Math

Orca-Math synthesized Orca-Math-dataset, which contains 200k math problems, and used it to bring an SLM's GSM8K pass@1 to 81.50%.

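Orca-Math's data synthesis aims to obtain a diverse set of grade-school math word problems, both easy and hard. As a starting point, 36,217 problems were collected from various open-source datasets, following the benchmark collection in "Lila: A unified benchmark for mathematical reasoning".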

1. Ask Me Anything agent

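This seed set is expanded into more problems with the prompt below. The paper calls the model that uses this prompt specifically to generate problems the "Ask Me Anything" agent: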

Your goal is to create multiple word problems from a given word problem and its answer. First convert the question of the word problem into a statement.

Then for each number in the converted problem create a new word problem.

Here are some examples:

Example 1: Q: Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May?

Answer: 72

Replacing question with statement: Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. Natalia sold altogether 72 clips in April and May.

All questions:

<target> 48

<question> Natalia sold clips to some of her friends in April, and then she sold half as many clips in May. Natalia sold altogether 72 clips in April and May. How many clips did she sell in April?

<target> half

<question> Natalia sold clips to 48 of her friends in April, and then she sold some clips in May. Natalia sold altogether 72 clips in April and May. What is the ratio of the number clips sold in April to number clips sold in May?

<target> 72

<question> Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May?

Example 2: Q: Weng earns $12 an hour for babysitting. Yesterday, she just did 50 minutes of babysitting. How much did she earn?

Answer: 10

Replacing question with statement: Weng earns $12 an hour for babysitting. Yesterday, she just did 50 minutes of babysitting. She earned $10.

All questions:

<target> 12

<question> Weng earns a certain amount per hour for babysitting. Yesterday, she just did 50 minutes of babysitting and earned 10. How much does she earn per hour?

<target> 50

<question> Weng earns 12 an hour for babysitting. Yesterday, she just did some babysitting and earned 10. How much time did she spend on babysitting?

<target> 10

<question> Weng earns 12 an hour for babysitting. Yesterday, she just did 50 minutes of babysitting. How much did she earn?

Example 3: Q: Betty is saving money for a new wallet which costs 100. Betty has only half of the money she needs. Her parents decided to give her 15 for that purpose, and her grandparents twice as much as her parents. How much more money does Betty need to buy the wallet?

Answer: 5

Replacing question with statement: Betty is saving money for a new wallet which costs 100. Betty has only half of the money she needs. Her parents decided to give her 15 for that purpose, and her grandparents gave her twice as much as her parents. She needs 5 more to buy the wallet.

All questions:

<target> 100

<question> Betty is saving money for a new wallet. Betty has only half of the money she needs. Her parents decided to give her 15 for that purpose, and her grandparents twice as much as her parents. She needs 5 more to buy the wallet. What is the cost of the wallet?

<target> half

<question> Betty is saving money for a new wallet which costs 100. She has some money saved, her parents decided to give her 15, and her grandparents gave her twice as much as her parents. Now, Betty needs 5 more to buy the wallet. What is the ratio of the money Betty have saved initially to the cost of wallet?

<target> 15

<question> Betty is saving money for a new wallet which costs 100. She has half of the money she needs, her parents decided to give her some money, and her grandparents gave her twice as much as her parents. Now, Betty needs 5 more to buy the wallet. How much money did her parents give her?

<target> twice

<question> Betty is saving money for a new wallet which costs 100. Betty has only half of the money she needs. Her parents decided to give her 15 for that purpose, and her grandparents also chipped in. Now, Betty needs 5 more to buy the wallet. What is the ratio of the amount given by her grandparents to the amount given by her parents?

<target> 5

<question> Betty is saving money for a new wallet which costs 100. Betty has only half of the money she needs. Her parents decided to give her 15 for that purpose, and her grandparents twice as much as her parents. How much more money does Betty need to buy the wallet?

Now solve this:

Example 4: Q: Your teacher is giving a test worth 200 points. There is a total of 30 5-point and 10-point questions. How many 5-point questions are on the test? Answer: 20

The "Ask Me Anything" agent eventually generated 120,445 problems from the seed data.
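The agent's output is a list of `<target>` / `<question>` blocks, as in the examples above, so turning it into new problems is a parsing step. The sketch below assumes the completion follows that layout. `new_problems` is a hypothetical helper. It drops the block that merely restates the seed question (the one targeting the original answer); that deduplication is our own assumption, not something the text states.

```python
import re

# One <target> ... <question> ... block, up to the next <target> or end of text
BLOCK = re.compile(r"<target>\s*(.*?)\s*<question>\s*(.*?)\s*(?=<target>|$)", re.S)

def parse_ama_output(text):
    """Return (target, question) pairs from the agent's completion."""
    return BLOCK.findall(text)

def new_problems(original_question, text):
    """Keep generated questions that differ from the seed question (assumed dedup rule)."""
    return [q for _, q in parse_ama_output(text) if q.strip() != original_question.strip()]
```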

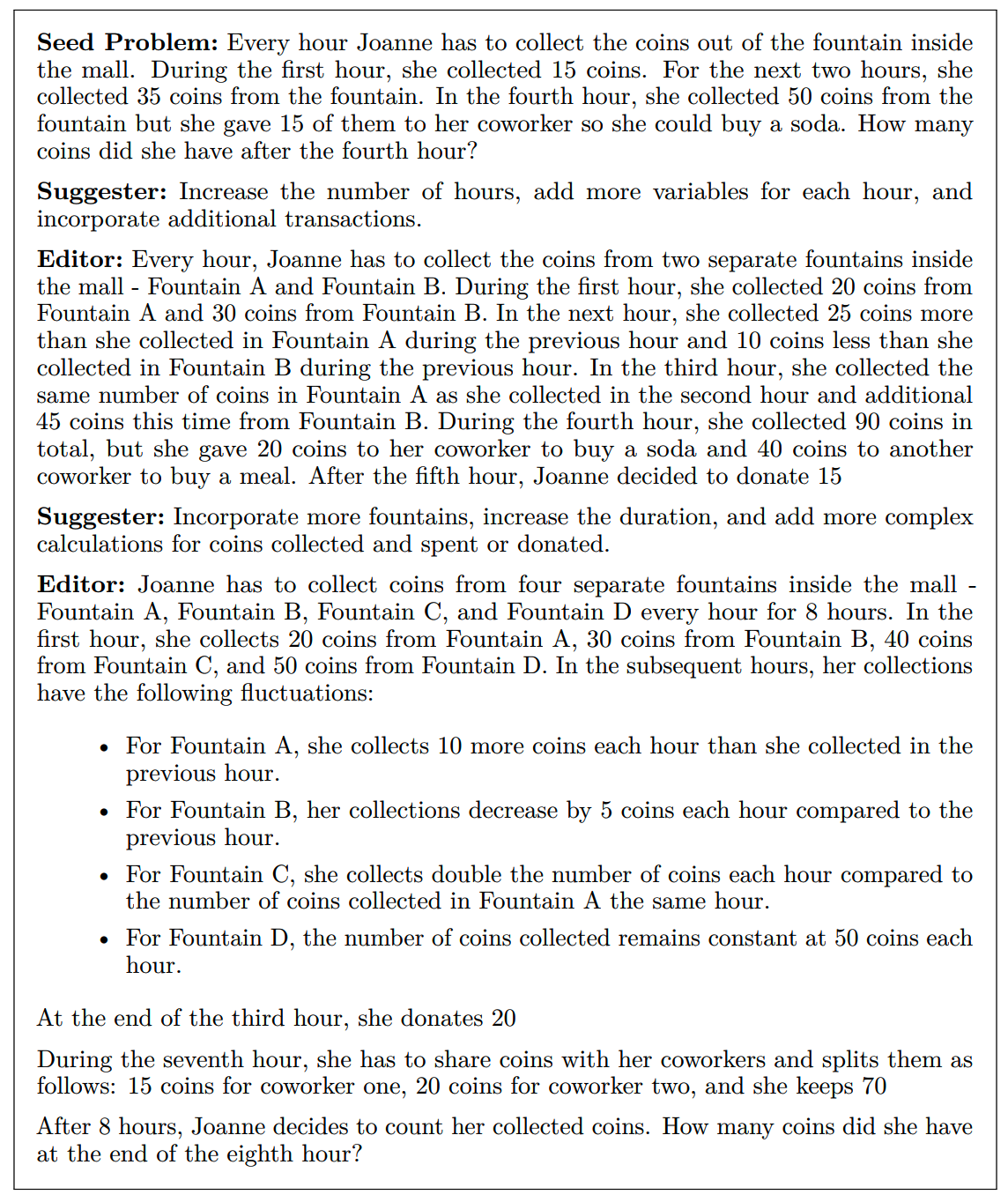

2. Suggester & Editor

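To increase problem difficulty, a Suggester & Editor pair is introduced.

The Suggester proposes abstract, high-level suggestions. The Editor then revises the existing problem based on the problem itself and the Suggester's suggestions. An example is shown below: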

Summary

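- Much of the work in data synthesis lies in prompt design, which usually needs several rounds of iteration tailored to the model being used

- Diversity is the constant core of data synthesis. Diverse seeds, retrieved few-shot prompts, and repeated sampling all aim to produce diverse data that better matches the real world

- There are some more automated approaches, which the next post will cover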

Blog: http://www.linsight.cn/

Zhihu: Linsight

WeChat official account: Linsight

Blogger's WeChat ID (please state your purpose when adding):



References

[1] SELF-INSTRUCT: Aligning Language Models with Self-Generated Instructions. https://arxiv.org/abs/2212.10560

[2] Alpaca: A Strong, Replicable Instruction-Following Model. https://crfm.stanford.edu/2023/03/13/alpaca.html

[3] WizardLM: Empowering Large Language Models to Follow Complex Instructions. https://arxiv.org/abs/2304.12244

[4] MAmmoTH2: Scaling Instructions from the Web. https://arxiv.org/abs/2405.03548

[5] Genie: Achieving Human Parity in Content-Grounded Datasets Generation. https://arxiv.org/abs/2401.14367

[6] Orca-Math: Unlocking the potential of SLMs in Grade School Math. https://arxiv.org/abs/2402.14830